
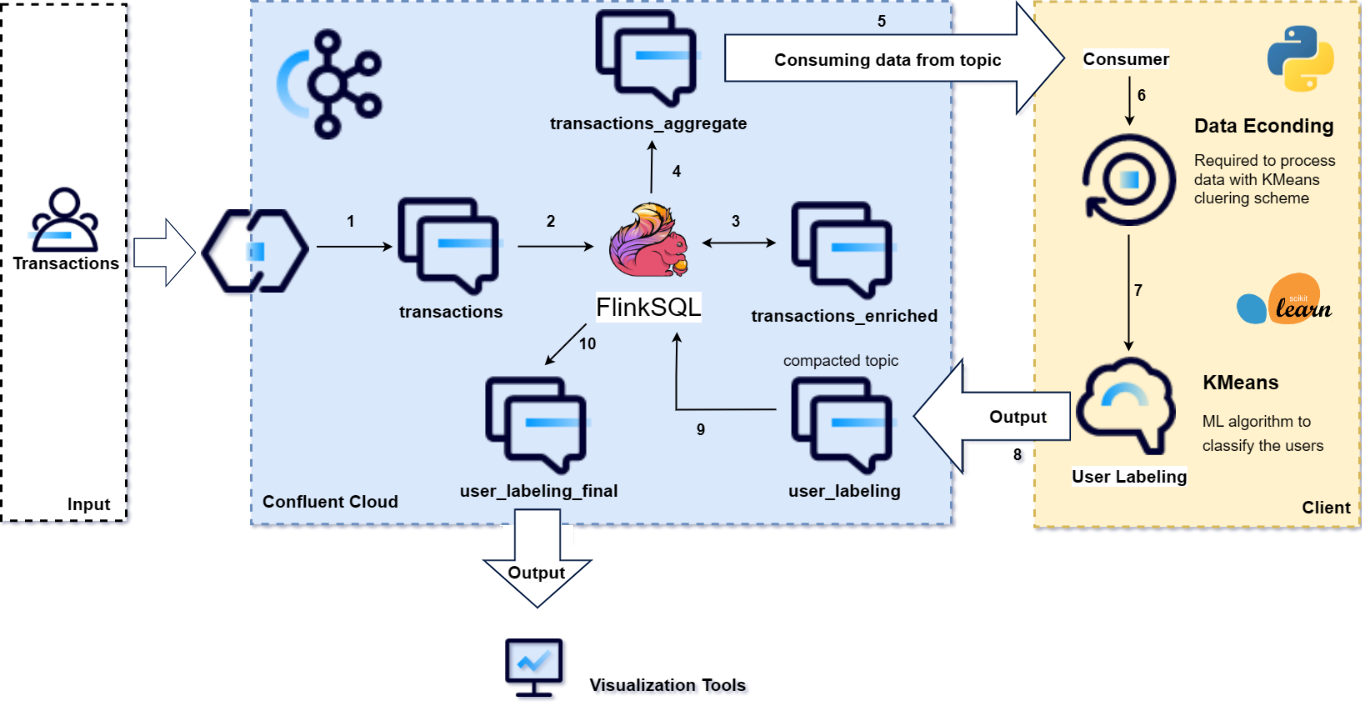

Empower data scientists, marketing managers, and IT decision-makers with a User and Entity Behavior Analytics (UEBA) application that supports targeted marketing campaigns driven by real-time user behavior insights.

Data Reply IT actively participates in the Build with Confluent initiative. Through validation of our streaming-based use cases with Confluent, we ensure that our Confluent-powered service offerings leverage the capabilities of the leading data streaming platform to their fullest potential. This validation process, conducted by Confluent's experts, guarantees the technical effectiveness of our services.

Want more information? Follow the GitHub link to learn more about the hands-on project.

Confluent


Confluent is the data streaming platform that is pioneering a fundamentally new category of data infrastructure that sets data in motion. Confluent's cloud-native offering is the foundational platform for data in motion – designed to be the intelligent connective tissue enabling real-time data, from multiple sources, to constantly stream across the organization. With Confluent, organizations can meet the new business imperative of delivering rich, digital front-end customer experiences and transitioning to sophisticated, real-time, software-driven backend operations.